[NOTE: I originally posted this in August of 2012. However, I am seeing clients who would fall into the definition Atwood uses for "madness."]

I am reading George Atwood's

The Abyss of Madness (Psychoanalytic Inquiry Book Series)

(2011) as part of an intersubjective, relational psychoanalytic study

group I have been a part of for the last two and a half years. Aside

from a few essays, this is the first work I have read that is

specifically Atwood's own thinking (most of the other books he had

co-authored with Robert Stolorow).

Here is the description of the book from Amazon:

Despite the many ways in which the so-called psychoses can become

manifest, they are ultimately human events arising out of human

contexts. As such, they can be understood in an intersubjective manner,

removing the stigmatizing boundary between madness and sanity. Utilizing

the post-Cartesian psychoanalytic approach of phenomenological

contextualism, as well as almost 50 years of clinical experience, George

Atwood presents detailed case studies depicting individuals in crisis

and the successes and failures that occurred in their treatment. Topics

range from depression to schizophrenia, bipolar disorder to dreams,

dissociative states to suicidality. Throughout is an emphasis on the

underlying essence of humanity demonstrated in even the most extreme

cases of psychological and emotional disturbance, and both the

surprising highs and tragic lows of the search for the inner truth of a

life – that of the analyst as well as the patient.

I very much like the way he conceptualizes these issues, even when I do

not agree with his perspective on the mind. The way he talks about

mental illness feels right in terms of how the client experiences it,

and in the relational/intersubjective model, meeting the client in his

or her own reality is essential.

When he speaks of madness in these passages, he is referring to

psychosis and schizophrenia, or even bipolar disorder in its manic

stage. These are not cases of simple depression, although there is

certainly some similarity at a much lower intensity. And all these terms

are things that he rejects as scientific defense mechanisms against our

own fears of the abyss and what that means for our shared sense of

being human.

It's worth bearing in mind that the psychoanalytic school refused to

treat the "psychotics" for decades following Freud based on his

assumption that they were not amenable to treatment.

Harry Stack Sullivan,

in the late 1920s, was one of the first psychoanalytically trained

therapists to work with schizophrenics, and he did so based on his

"problems with living" definition of mental illness. Sullivan was also

one of the first psychoanalysts to focus on the "self system" as the

outcome of relational patterns in the child's life (eventually giving

rise to attachment theory). Atwood is definitely a lineage holder in the

tradition Sullivan created that has been expanded upon by Stolorow,

Donna Orange, and others.

Here are a few quotes I have highlighted in the text that I think illustrate his thinking.

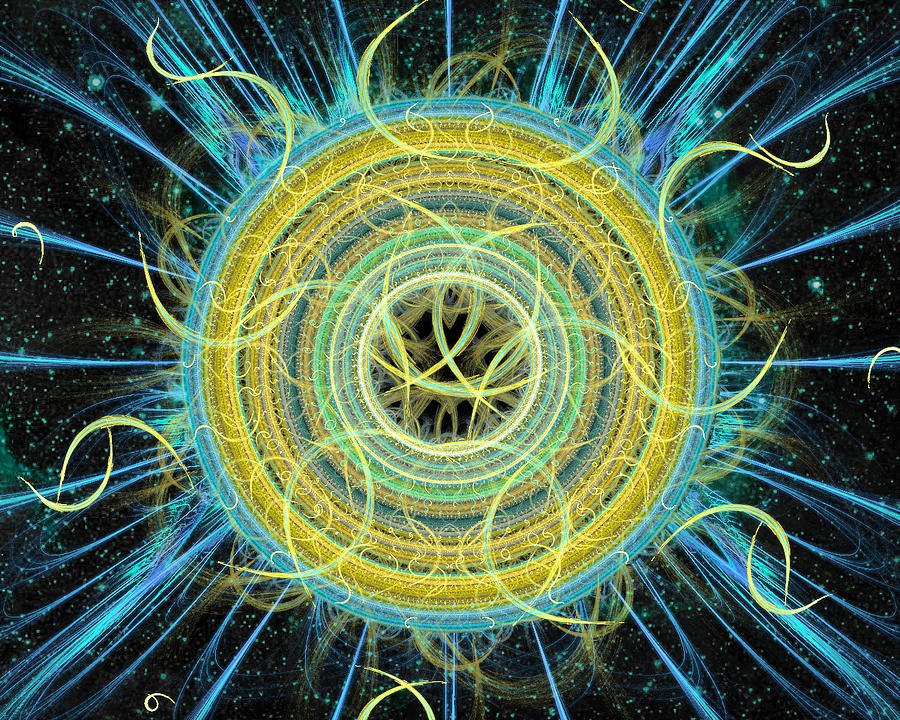

Phenomenologically, going mad is a matter of the fragmentation of the

soul, of a fall into nonbeing, of becoming subject to a sense of erasure

and annihilation. The fall into the abyss of madness, when it occurs,

is felt as something infinite and eternal. One falls away, limitlessly,

from being itself, into utter nonbeing. (p. 40)

* * * *

Madness is not an illness, and it is not a disorder. Madness is the

abyss. It is the experience of utter annihilation. Calling it a disease

and distinguishing its forms, arranging its manifestations in carefully

assembled lists and charts, creating scientific-sounding

pseudo-explanations for it--all of these are intellectually

indefensible, and I think they occur because of the terror. What is the

terror I am speaking of? It is the terror of madness itself, which is

the anxiety that one may fall into nonbeing.

The abyss lies on or just beyond the horizon of every person's world,

and there is nothing more frightening. Even death does not hold a terror

for us comparable to the one associated with the abyss. (p. 41)

He suggests that death offers a potential reunion with loved ones, or

conversely, a release or relief from the sorrows and pains of our lives.

We can rage against the dying of the light, or marvel at our capacity

to contemplate our own demise, or even imagine the world without us.

But the descent into madness, into the abyss, offers no such relief.

It is the end of all possible responses and meanings, the erasure of a

world in which there is anything coherent to respond to, the melting

away of anyone to engage in a response. It is much more scary than

death, and this is proven by the fact that people in fear of

annihilation--the terror of madness--so often commit suicide rather than

continue with it. (p. 42)

* * * *

People often fall not because the bad happens, but rather because the

good stops happening. Sanity is sustained by a network of validating,

affirming connections that exist in a person's life: connections to

other beings. If those connections fail, one falls. The beings on whom

one relies include, obviously, other people, sometimes animals, often

beings known only through memory and creative imagination. In some

instances it is the connection to God that protects a person against

madness. Strip any person of his or her sustaining links to others, and

that person falls. No one is immune, because madness is a possibility of

every human life. (p. 43)

* * * *

What a person in the grip of annihilation needs, above all else, is

someone's understanding of the horror, which will include a human

response assisting in the journey back to some sort of psychological

survival. A person undergoing an experience of the total meltdown of the

universe, when told that his or her suffering stems from a mental

illness, will generally feel confused, invalidated, and undermined.

Because there are no resources to fight against such a view, its power

will have a petrifying effect on subjectivity and deepen the fall into

the abyss. (p. 45)

Atwood contends that an objectified psychiatric diagnosis is the

antithesis of what is needed, which is essentially mirroring and validation. He

offers a thought experiment: Imagine a young man, maybe in his early

20s, who is in the midst of a fall into the abyss. This young man finds

himself committed to an in-patient psych ward where he is given the

diagnosis of a brain disease called schizophrenia.

The annihilating impact of such a view then becomes symbolized in the

patient's unfolding experience that vicious, destructive voices are

speaking to him over invisible wires and saying repeatedly that he

should die. In this way a spiraling effect occurs, wherein the operation

of the medical model further injures the already devastated patient,

whose reactions to the new injuries in turn reconfirm the correctness of

the diagnosis. (p. 45-46)

He prefers to be with the client in whatever space they inhabit, to show

them that he is listening and trying to comprehend their experiences as

much as he is able - and, above all else, that he is prepared to do

whatever is necessary to help.

I have had clients in the past who I felt unable to help, because I was

unable to be with them in their abyss, to extend my empathy into what I

experienced as their delusional states. I failed them. Even as I sat

with them and tried to understand what they were telling me, I did not

understand that their delusions were their psychic organizing

principles, were their symbolic truth of how the world has betrayed

them.

Atwood, in the many case studies he presents, is revealed as someone who

can feel into the annihilation his clients present him with, but he

also acknowledges how challenging it is:

Working in the territory of annihilated souls is never easy. To really

listen to someone, anyone, to hear the depth of what he or she may have

felt, to work one's way into realms of experience never before perceived

by anyone and therefore never articulated--all of this is as hard a

task as one may undertake. (p. 51)

It is indeed. And it is also rewarding when the therapist can do so

successfully and allow the client to feel heard and validated - maybe

for the first time in their lives.

I want to wrap up this post with a few more passages that deal with etiology. I posted some thoughts recently on

a more relationally based diagnostic manual for counselors and therapists - Atwood conceptualizes cases in a way that fits with what I would like to see.

Those who feel they are not present, and who affirm the existence of

a machine that controls their minds and bodies, are often the products of

profound enmeshment with their caregivers in childhood. An accommodation

has taken place at a very young age in which the agenda of the

caregiver--it can be the mother, the father, or both--becomes the

supreme principle defining the child's developing sense of personal

identity. The experience of the child as an independent person in his or

her own right is nullified, so that the child the parents wish for can

be brought into being. Very often there are no outward signs of anything

amiss, as family life unfolds in a seeming harmony. Somewhere along the

way, however, the false self begins to crumble, and a sense of the

degree to which the child has been absent from life arises. This

emerging sense of never having been there, of having been controlled and

regulated by outside forces, is so unstable and fragmentary that it is

given concrete form. What is seen from the viewpoint of others as a

delusion then begins to crystallize, for example in the image of an

influencing machine (Tausk, 1917; Orange et al., 1997, chap. 4). Within

the world of the child, now perhaps chronologically an adult, the

so-called delusion is a carrier of truth that has up until then been

entirely hidden and erased. What looks like a breakdown into psychosis

and delusion thus may represent an attempted breakthrough, but the

inchoate "I" does require an understanding and responsive "Thou" in

order to have a chance to consolidate itself. (p. 60-61)

That last sentence is the essence of the relational model - we are

relational beings, the damage to our sense of self that we experience is

nearly always relational, and if there is to be healing of that damage,

that too must be relational - it requires mirroring, validation, and

the sense of human connection that is vital to sanity for all of us.
